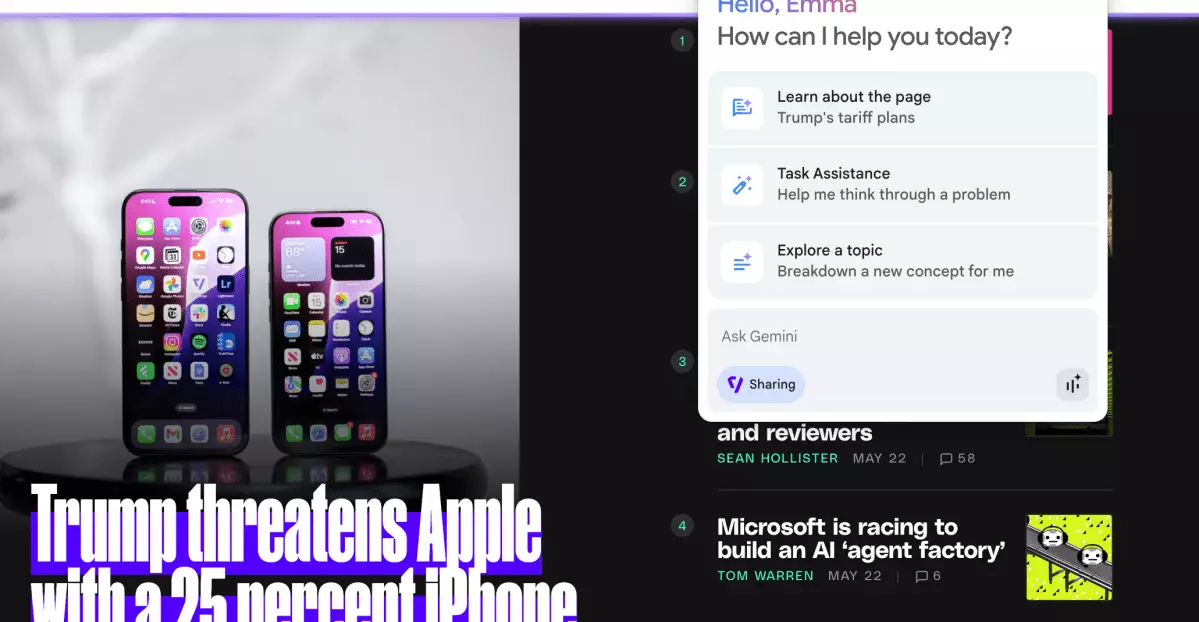

In an era where efficiency and multitasking have become synonymous with productivity, Google’s Gemini takes a bold leap by integrating artificial intelligence directly into the web browsing experience. As someone who regularly navigates a myriad of online information, I found discovering Gemini’s capabilities eye-opening. Having an AI assistant right within Chrome offers immense potential that, though still in its nascent stages, hints at a far more agentic future for personal computing.

Gemini isn’t just a chatbot; it’s a companion for your digital journey. With an inviting interface nestled in the top-right corner of Chrome, it expands the conventional confines of virtual assistance. Gone are the days of switching apps to look something up; Gemini lets users interact with their browsing environment directly, raising the question: how far can this new integration truly go?

A Tangible Interaction Between AI and Information

The initial feeling when using Gemini is one of empowerment, akin to having a knowledgeable friend accompany you as you sift through articles and videos. It can assist with a variety of tasks, from summarizing complex articles to pulling gaming news directly from websites. Personally, I found its ability to capture relevant data from streaming sites exhilarating. For example, with the click of a button, I learned about Nintendo’s additions to its Game Boy catalog and details of major updates to gaming hardware, without extensive scrolling or searching.

However, the technology isn’t without its limitations. Gemini can only interpret what it “sees” on screen at a given moment, which means that if you want a summary of a section lurking below the fold (say, the comment threads), you must first scroll it into view. This limitation highlights the balancing act between an AI’s prowess and our own habits, and it pushes us to adapt in order to get the most out of it.

Speech Recognition: An Innovative Leap

One of the standout features of Gemini is its voice interaction capability, allowing for a hands-free experience that can be particularly valuable while watching instructional content. Imagine being able to pause a complex DIY video and simply ask, “What tool is being used?” A rapid response that identifies a tool such as a nail gun can be a game changer for DIY enthusiasts and hobbyists alike. However, while this feature showcases Gemini’s impressive speech recognition abilities, it also reveals an ongoing challenge: the accuracy of its answers often hinges on the media being properly tagged.

When a video lacks listed chapters, navigating through it can sometimes lead to miscommunication and incorrect responses. For those relying on these features to enhance their learning, such discrepancies may prove frustrating. Yet the potential is profound; I found it especially gratifying when Gemini effortlessly pulled recipes from cooking videos. This integration could significantly alter how we learn and engage with content online.

Inconsistencies and Room for Improvement

Despite the excitement surrounding Gemini, there were instances of underwhelming performance. For example, while searching for real-time information or specific product listings, I encountered moments where Gemini lacked the data to deliver an answer. It often pointed out its own limitations rather than offering alternatives or suggestions to make navigation easier. Such occurrences may lead users to question the assistant’s utility in practical scenarios, particularly in an environment that increasingly demands immediacy and precision from technology.

Moreover, some of Gemini’s responses felt disproportionately lengthy for a compact interface. While detail is appreciated, a balance must be found so that interactions remain efficient and informative, fulfilling the promise of saving users time rather than consuming it.

The Road Ahead: Embracing an Agentic AI

Despite the hiccups, it is clear that Google has ambitious plans for Gemini. The concept of making its AI ‘agentic,’ capable of performing proactive evaluations and actions, nudges us toward a future where it can autonomously fulfill simple tasks on our behalf. Imagining a scenario in which Gemini summarizes restaurant menus and even places an order on its own is no longer far-fetched; it’s a glimpse of what could soon be realized.

The whispers of Project Mariner’s “Agent Mode” promise a remarkable evolution for Gemini, hinting at a future in which the assistant could juggle multiple tasks and research needs simultaneously. Such capabilities signal an incoming wave of personal productivity tools that meld human intention with AI execution, changing the way we interact with the digital world.

Gemini’s early integration into Chrome may feel like a fledgling spark in a much larger innovation, yet its promising features and intuitive design point to a future where our digital engagements become not only more intelligent but markedly more efficient.